Amit Roy-Chowdhury, a professor of electrical and computer engineering at UC Riverside’s Marlan and Rosemary Bourns College of Engineering, is leading a team that has received a grant totaling almost $1 million from the Defense Advanced Research Projects Agency, or DARPA, to understand the vulnerability of computer vision systems to adversarial attacks. The project falls under the Machine Vision Disruption program, part of DARPA’s AI Explorations program. The results could have broad applications in autonomous vehicles, surveillance, and national defense.

Team members include UC Riverside colleagues Srikanth Krishnamurthy, Chengyu Song, and Salman Asif, with the Xerox-affiliated company PARC as an outside collaborator.

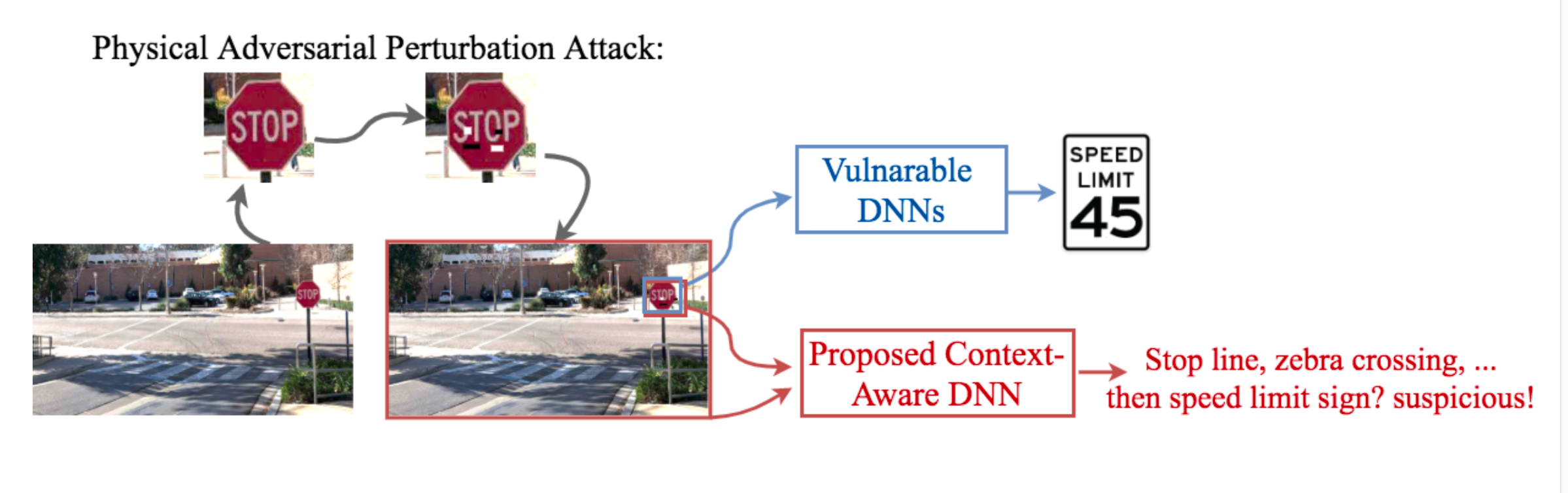

When humans look at an object, they also notice the entire scene around it. This wider visual context makes it easy for them to spot and interpret irregularities. A human driver, noticing a sticker pasted onto a stop sign, knows the sticker doesn’t change the meaning of the sign and will stop the car. However, an autonomous vehicle using deep neural networks for object recognition might fail to recognize the stop sign because of the sticker and plow through the intersection.

No matter how well trained computer algorithms are at recognizing variations of an object, image disturbances will always increase the likelihood of the computer making a bad decision or recommendation. Their vulnerability to image manipulation makes deep neural networks that process images an attractive target for malicious actors who want to disrupt decisions and activities mediated by visual artificial intelligence.

Roy-Chowdhury, Krishnamurthy, and Song recently published a paper at the European Conference on Computer Vision, a premier computer vision conference. The work aims to teach computers which objects usually appear near each other, so that if one of them is altered or absent the computer will still make the right decision.

“If there is something out of place, it will trigger a defense mechanism,” Roy-Chowdhury said. “We can do this for perturbations of even just one part of an image, like a sticker pasted on a stop sign.”

To use another example, when people see a horse or a boat, they expect to see certain things around it, such as a barn or a lake. If one of these scenes is disturbed (perhaps the horse is standing in a car dealership or the boat is floating in clouds), a person can tell that something is wrong. Roy-Chowdhury’s group hopes to bring this ability to computers.
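
As a rough illustration of checking an object against its surroundings, the Python sketch below scores how well a detected label fits the other labels found in the same scene, using a small table of co-occurrence counts. The counts, labels, threshold, and function names are all hypothetical and chosen only for illustration. This is not the method from the group's paper, just one simple way the idea could be expressed.

```python
# Hypothetical co-occurrence counts: how often two labels appeared together
# in an imagined training set. All numbers are invented for illustration.
COOCCURRENCE = {
    frozenset({"horse", "barn"}): 80,
    frozenset({"horse", "fence"}): 60,
    frozenset({"horse", "car"}): 2,
    frozenset({"boat", "lake"}): 90,
    frozenset({"boat", "cloud"}): 5,
}

# Hypothetical number of training scenes containing each label.
LABEL_COUNTS = {"horse": 100, "boat": 100}


def context_score(label, context):
    """Average fraction of `label` scenes that also contained each context label."""
    if not context:
        return 1.0  # no surrounding objects, so nothing contradicts the label
    total = LABEL_COUNTS.get(label, 0)
    if total == 0:
        return 0.0  # label never seen, so no evidence that it fits
    fractions = [
        COOCCURRENCE.get(frozenset({label, other}), 0) / total
        for other in context
    ]
    return sum(fractions) / len(fractions)


def is_suspicious(label, context, threshold=0.2):
    """Flag a detection whose surroundings rarely appear with it (assumed threshold)."""
    return context_score(label, context) < threshold
```

With these made-up counts, `is_suspicious("horse", ["barn", "fence"])` is false, while `is_suspicious("horse", ["car"])` and `is_suspicious("boat", ["cloud"])` are true, mirroring the horse in a car dealership and the boat in the clouds.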

To do this, they first need to determine what kinds of attacks are possible. The DARPA project will focus on generating adversarial attacks using visual context information, thus leading to a better understanding of machine vision system vulnerabilities.

“We will add perturbations to image systems to make computers give the wrong answers,” Roy-Chowdhury said. “This can later lead to the design of defenses against the attacks.”
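
To make the idea of a perturbation concrete, here is a minimal Python sketch using a toy linear classifier rather than a deep neural network. Each pixel is nudged by a small, bounded amount in the direction that lowers the classifier's score, which is enough to flip its answer. The weights, pixel values, labels, and `epsilon` are hypothetical, and the sign-based step is a generic textbook technique, not the approach the DARPA project will use.

```python
def score(weights, bias, pixels):
    """Linear score; a positive value means the toy model says 'stop sign'."""
    return sum(w * x for w, x in zip(weights, pixels)) + bias


def predict(weights, bias, pixels):
    return "stop sign" if score(weights, bias, pixels) > 0 else "other"


def sign(value):
    """Return -1, 0, or 1 according to the sign of value."""
    return (value > 0) - (value < 0)


def perturb(weights, pixels, epsilon):
    """Move each pixel by at most epsilon to lower the score, keeping it in [0, 1].

    For a linear model the gradient of the score with respect to a pixel is
    that pixel's weight, so stepping against the weight's sign lowers the score.
    """
    return [
        min(1.0, max(0.0, x - epsilon * sign(w)))
        for w, x in zip(weights, pixels)
    ]


# Hypothetical four-pixel "image" and model parameters.
WEIGHTS = [0.9, -0.4, 0.7, -0.2]
BIAS = -0.5
IMAGE = [0.8, 0.3, 0.6, 0.5]

ADVERSARIAL = perturb(WEIGHTS, IMAGE, epsilon=0.25)
```

In this toy setup, `predict(WEIGHTS, BIAS, IMAGE)` returns `"stop sign"`, while `predict(WEIGHTS, BIAS, ADVERSARIAL)` returns `"other"`, even though no pixel changed by more than 0.25.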