Every time you run a ChatGPT artificial intelligence query, you use up a little bit of an increasingly scarce resource: fresh water. Run some 20 to 50 queries, and roughly half a liter (around 17 ounces) of fresh water from our overtaxed reservoirs is lost in the form of steam.
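Divided out per query, the half-liter figure works out to roughly 10 to 25 milliliters of water for each ChatGPT query. This is simple arithmetic on the numbers above, not a separate figure reported by the study:

$$
\frac{500\ \text{mL}}{50\ \text{queries}} = 10\ \text{mL per query},
\qquad
\frac{500\ \text{mL}}{20\ \text{queries}} = 25\ \text{mL per query}
$$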

Such are the findings of a University of California, Riverside, study that for the first time estimated the water footprint from running artificial intelligence, or AI, queries that rely on the cloud computations done in racks of servers in warehouse-sized data processing centers.

Google’s data centers in the U.S. alone consumed an estimated 12.7 billion liters of fresh water in 2021 to keep their servers cool, at a time when climate change is exacerbating droughts, Bourns College of Engineering researchers reported in the study, which was posted online as a preprint on arXiv and is awaiting peer review.

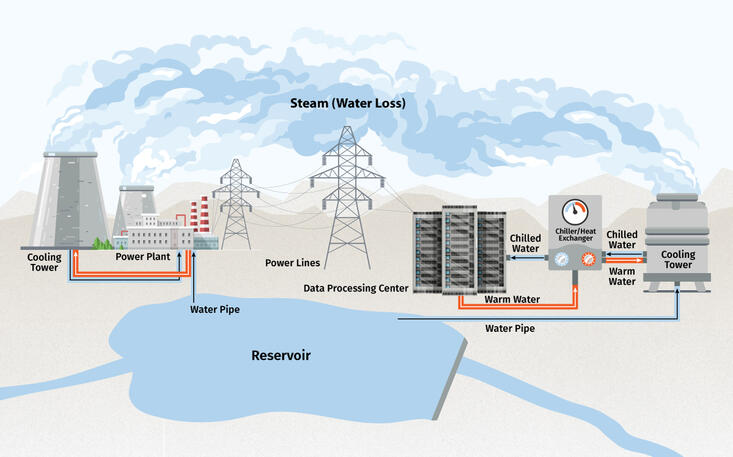

Shaolei Ren, an associate professor of electrical and computer engineering and the corresponding author of the study, explained that data processing centers consume great volumes of water in two ways.

First, the centers draw electricity from power plants that use large cooling towers, which convert water into steam that is emitted into the atmosphere.

Second, the hundreds of thousands of servers at the data centers must be kept cool as electricity moving through semiconductors continuously generates heat. This requires cooling systems that, like power plants, are typically connected to cooling towers that consume water by converting it into steam.

“The cooling tower is an open loop, and that’s where the water will evaporate and remove the heat from the data center to the environment,” Ren said.

Ren said it is important to address the water use from AI because it is a fast-growing segment of computer processing demands.

For example, training GPT-3 for roughly two weeks in Microsoft’s state-of-the-art U.S. data centers consumed about 700,000 liters of fresh water, roughly the amount used to manufacture 370 BMW cars or 320 Tesla electric vehicles, the paper said. Water consumption would have tripled had the training been done in Microsoft’s less efficient data centers in Asia. Car manufacturing requires a series of washing processes to remove paint particles and residues, among several other water uses.
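For a sense of scale, the vehicle comparison implies roughly 1,900 to 2,200 liters of water per car, and tripling the U.S. figure gives about 2.1 million liters for the same training run in Asia. These are back-of-the-envelope divisions of the paper's stated numbers, not results reported separately in the study:

$$
\frac{700{,}000\ \text{L}}{370\ \text{cars}} \approx 1{,}890\ \text{L per BMW},
\qquad
\frac{700{,}000\ \text{L}}{320\ \text{vehicles}} \approx 2{,}190\ \text{L per Tesla},
\qquad
3 \times 700{,}000\ \text{L} = 2.1 \times 10^{6}\ \text{L}
$$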

Ren and his co-authors (UCR graduate students Pengfei Li and Jianyi Yang, and Mohammad A. Islam of the University of Texas at Arlington) argue that big tech companies should take responsibility and lead by example in reducing their water use.

Fortunately, AI training offers scheduling flexibility. Unlike web search or YouTube streaming, which must be processed immediately, AI training can be done at almost any time of day. To avoid wasting water, a simple and effective solution is to train AI models during cooler hours, when less water is lost to evaporation, Ren said.

“AI training is like a very big lawn and needs lots of water for cooling,” Ren said. “We don't want to water our lawns during the noon, so let's not water our AI (at) noon either.”

This may conflict with carbon-efficient scheduling, which tends to follow the sun to make use of clean solar energy. “We can’t shift cooler weather to noon, but we can store solar energy, use it later, and still be ‘green,’” Ren said.
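To make the cooler-hours idea concrete, here is a minimal Python sketch of water-aware scheduling. It assumes a hypothetical hourly profile of on-site water use per kilowatt-hour (illustrative values, not measurements from the study) and picks the contiguous training window, allowed to wrap past midnight, with the lowest total. The function name `best_start_hour` and the profile are invented for illustration; this is not the method described in the paper.

```python
from typing import Sequence


def best_start_hour(water_per_kwh: Sequence[float], hours_needed: int) -> int:
    """Return the start hour of the contiguous `hours_needed`-hour window
    (wrapping past midnight) whose summed water intensity is lowest.

    water_per_kwh: hypothetical liters of water consumed per kWh for each hour
    of the day, index 0 = midnight. Hotter hours get higher values because more
    water evaporates in the cooling towers.
    """
    n = len(water_per_kwh)
    if not 1 <= hours_needed <= n:
        raise ValueError("hours_needed must be between 1 and len(water_per_kwh)")

    best_hour, best_total = 0, float("inf")
    for start in range(n):
        # Sum this window's hours, wrapping from hour n-1 back to hour 0.
        total = sum(water_per_kwh[(start + k) % n] for k in range(hours_needed))
        if total < best_total:  # strict '<' keeps the lowest start hour among ties
            best_hour, best_total = start, total
    return best_hour


# Hypothetical, illustrative profile (NOT measured data): low overnight,
# rising to a peak at noon (hour 12), falling again in the evening.
profile = (
    [1.0] * 6                                                        # 00:00-05:59
    + [1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 4.0, 3.5, 3.0, 2.5, 2.0]  # 06:00-17:59
    + [1.5, 1.2, 1.0, 1.0, 1.0, 1.0]                                 # 18:00-23:59
)

start = best_start_hour(profile, hours_needed=8)
print(f"Start an 8-hour training job at {start}:00")  # Start an 8-hour training job at 20:00
```

With this made-up profile, an eight-hour job is placed starting at 8 p.m., spanning the cool overnight hours rather than the noon peak. A carbon-aware scheduler could add a weighted carbon-intensity term to each hour's score to capture the trade-off Ren describes; that extension is likewise an assumption, not something specified in the study.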

“It is truly a critical time to uncover and address the AI model’s secret water footprint amid the increasingly severe freshwater scarcity crisis, worsened extended droughts, and quickly aging public water infrastructure,” reads the paper, which is titled “Making AI Less ‘Thirsty’: Uncovering and Addressing the Secret Water Footprint of AI Models.”